Due to concerns about the potential military applications of the American Space Shuttle program, the Soviet Union developed a reusable spaceplane during the 1970s and '80s with similar capabilities. The program was called Buran, Russian for "Snowstorm," and you can read more about it here:

Buran

At one time the Space Shuttle was intended to replace every expendable launch vehicle in the United States' rocket stable and perform every government satellite launch by the time it was up to its full launch cadence. To handle the traffic, three launch pads were prepared: two at the Kennedy Space Center in Florida to handle eastbound launches to geosynchronous orbit and other low-inclination orbits, and one at Vandenberg Air Force Base in California to handle southbound launches to polar orbit. Since polar orbits cover all of Earth's land area, they're preferred for reconnaissance satellites, allowing them to snoop on adversaries, frenemies, and outright enemies wherever they might roam. What delighted the White House and concerned the Kremlin was that the Space Shuttle could retrieve spacecraft as well as deploy them. In theory, a Shuttle could launch from Vandenberg at just the right moment to snatch a Soviet spy satellite over Antarctica, then return to land in California after a single orbit. Presumably the American president would then claim to know nothing about why a Soviet spacecraft had just disappeared without a trace.

Diplomatic absurdity aside, the Shuttle's large payload bay and high reentry maneuverability (required to enable single-orbit aborts after polar launches) made such missions technically feasible. Cold War logic being what it was, the Soviet military establishment eventually decided that if a NATO power was going to have such a capability, the USSR had to have it, too. The Soviet space design bureaus were then told to copy the Shuttle as best they could, and so Buran was born.
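To get a feel for why a single-orbit return demands so much maneuverability, here is a back-of-the-envelope sketch. While the orbiter goes once around the planet, the Earth keeps turning underneath it, so the launch site slides east of the orbital plane and the vehicle has to glide sideways to reach it. The 90-minute period and the 34.7° latitude are round-number assumptions, and the estimate ignores orbital precession and the exact ground-track geometry.

```python
import math

EARTH_RADIUS_KM = 6371.0     # mean Earth radius
SIDEREAL_DAY_S = 86164.1     # time for one full rotation of the Earth
VANDENBERG_LAT_DEG = 34.7    # assumed approximate latitude of the launch site
ORBIT_PERIOD_S = 90 * 60     # assumed low-orbit period of about 90 minutes


def crossrange_km(lat_deg, period_s):
    """Eastward distance a ground site at lat_deg travels during period_s."""
    rotation_rad = 2 * math.pi * period_s / SIDEREAL_DAY_S
    # A point at latitude lat moves along a circle of radius R * cos(lat).
    return EARTH_RADIUS_KM * math.cos(math.radians(lat_deg)) * rotation_rad


km = crossrange_km(VANDENBERG_LAT_DEG, ORBIT_PERIOD_S)
print(f"Site displacement after one orbit: {km:.0f} km "
      f"({km / 1.852:.0f} nautical miles)")
```

Under these assumptions the runway ends up roughly two thousand kilometers off to the side of where the orbit started, which is the kind of gap the orbiter's wings would have to close during reentry.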

At the time it was built, and possibly to this day, the Space Shuttle was the most analyzed engineered system ever made by human hands. Its mission requirements called for a significant amount of aerodynamic maneuverability and good handling qualities across speeds ranging from about 200 miles an hour at landing to over 17,000 miles an hour at the start of reentry. To guarantee that the vehicle could perform as intended, thousands of hours of wind tunnel tests were conducted at subsonic, transonic, supersonic, and hypersonic speeds across a range of gas densities and temperatures to simulate the full-scale Shuttle's launch and entry environments. Knowing that the Americans had done this, and wanting to save money wherever possible amid the stagnating communist economies of the late Brezhnev years, the Soviets elected to just duplicate the shape of the American Shuttle rather than repeat any of this work. Given the obvious brilliance of the Soviet human spaceflight heritage, this is a bit of a disappointment.

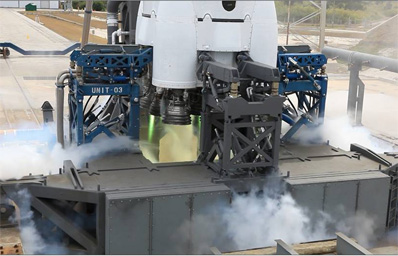

The most significant divergence between Buran and the Space Shuttle was in the propulsion system. The American aerospace industry invested heavily in solid rocket motor technology during the Cold War to perfect its ballistic missiles, while the Soviets were mostly happy with the workings of liquid rocket engines. As a result, the Americans leveraged their big solid motor know-how by including two massive solid rocket boosters in the Space Shuttle's launch stack, while the Soviets used liquid boosters burning kerosene and liquid oxygen in their place. While liquid rocket engines tend to be more expensive than solids, this choice makes sense for a launch vehicle. Unlike ballistic missiles, launch vehicles don't need to be ready to fly at a moment's notice, so it's okay if it takes hours to fuel the vehicle before liftoff. Liquids can also launch more payload per pound of propellant and can be shut down benignly in the event of launch trouble (once lit, it's very difficult to stop solid rocket motor combustion), which makes them a more appropriate choice for a reusable launch vehicle. And because the hydrogen-powered main engines were mounted on the core propellant tank rather than on the orbiter, the stack could also fly without Buran, launching large payloads (like, say, space laser battlestations) as the semi-reusable launch vehicle Energia.
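The "more payload per pound of propellant" point comes down to specific impulse, and the ideal rocket equation makes it concrete. The sketch below is illustrative only: the two specific impulse values are ballpark vacuum figures of the sort commonly quoted for large solid boosters and kerosene/oxygen engines, treated here as assumptions, and the 3,000 m/s target is an arbitrary number chosen for the comparison.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2

# Assumed, approximate vacuum specific impulse values in seconds.
ISP_SOLID_S = 268.0     # large solid rocket booster (assumption)
ISP_KEROLOX_S = 337.0   # kerosene/oxygen booster engine (assumption)


def delta_v(isp_s, mass_ratio):
    """Ideal velocity change for a given initial-to-final mass ratio."""
    return isp_s * G0 * math.log(mass_ratio)


def propellant_fraction(isp_s, dv_m_s):
    """Fraction of liftoff mass that must be propellant to reach dv_m_s."""
    return 1.0 - math.exp(-dv_m_s / (isp_s * G0))


TARGET_DV = 3000.0  # m/s, arbitrary illustrative value
for name, isp in [("solid", ISP_SOLID_S), ("kerosene/oxygen", ISP_KEROLOX_S)]:
    frac = propellant_fraction(isp, TARGET_DV)
    print(f"{name}: {frac:.1%} of stage mass must be propellant")
```

For the same velocity change, the higher specific impulse stage needs a smaller share of its mass to be propellant, which leaves more room for structure and payload. Real boosters also differ in dry mass, thrust, and cost, none of which this sketch tries to capture.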

Soviet industry was strained to the breaking point by the catch-up defensive programs of the 1980s. By the end of the decade, the Communist Bloc was bankrupt and the West was declaring victory in the Cold War. Buran was one of the most expensive programs the USSR ever embarked upon, even with all the cost-cutting and corner-cutting measures in its development, and it surely played a significant role in the fall of the Soviet Union. In the end it only flew once, unmanned, before being mothballed, and the orbiter was destroyed when the hangar housing it collapsed in 2002. But fly it did:
